阅读笔记 - (NSDI'22) Ekya Continuous Learning of Video Analytics Models on Edge Compute Servers

作者、优点和问题

- 问题

- 可否结合 serverless 进来,做 edge serverless continuous learning? => scale to zero 之后冷启动 load 数据的时间会变得很长,如果有一些预处理和预加载就会好很多了

- 参考文章:EAVS: Edge-assisted Adaptive Video Streaming with Fine-grained Serverless Pipelines

- 参考文章:Serverless empowered video analytics for ubiquitous networked cameras

- 可否重用 cached history models?

- 可否结合 serverless 进来,做 edge serverless continuous learning? => scale to zero 之后冷启动 load 数据的时间会变得很长,如果有一些预处理和预加载就会好很多了

- 优点

- 算是针对 Continuous Learning of Video Analytics Models 中进行 GPU 资源分配的开山作品!

Introduction

- 数据漂移

- 解决方案 1:模型压缩

- 解决方案 2:持续模型重训练

- retraining window,

- tradeoff between the** live inference accuracy** and **drop in accuracy **due to data drift

- 如果可以给更多的资源进行训练,那么更新的模型就可以更快的上线交付使用。如果在推理集群中拿走资源可能会降低推理的准确性【因为为了更好的服务质量,需要计算资源进行预处理】

- 论文问题和挑战

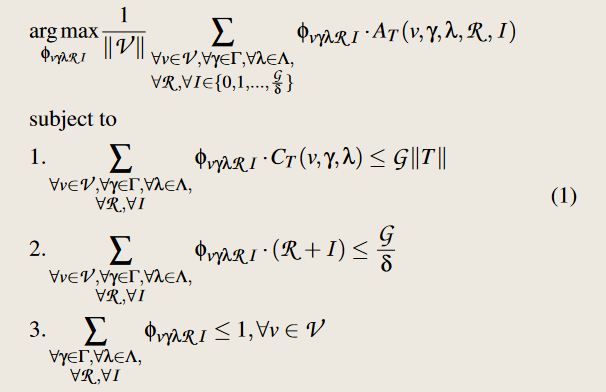

- 决策变量:在每个 retraining window 中,决策哪些 edge model 需要被重训练; 在重训练任务和推理任务中分配 GPU 资源; 针对重训练和推理任务选择配置方案

- 目标:最大化 retraining window 中的平均推理 acc

- 挑战:

- Different from (i) video inference systems that optimize only the instantaneous accuracy (ii) model training systems that optimize only the eventual accuracy

- The** decision space is multi-dimensional **consisting of a diverse set of retraining and inference configurations, and choices of resource allocations over time.

- It is difficult to know the **performance **of different configurations (in resource usage and accuracy) as it requires actually retraining using different configurationsI

- 论文的贡献

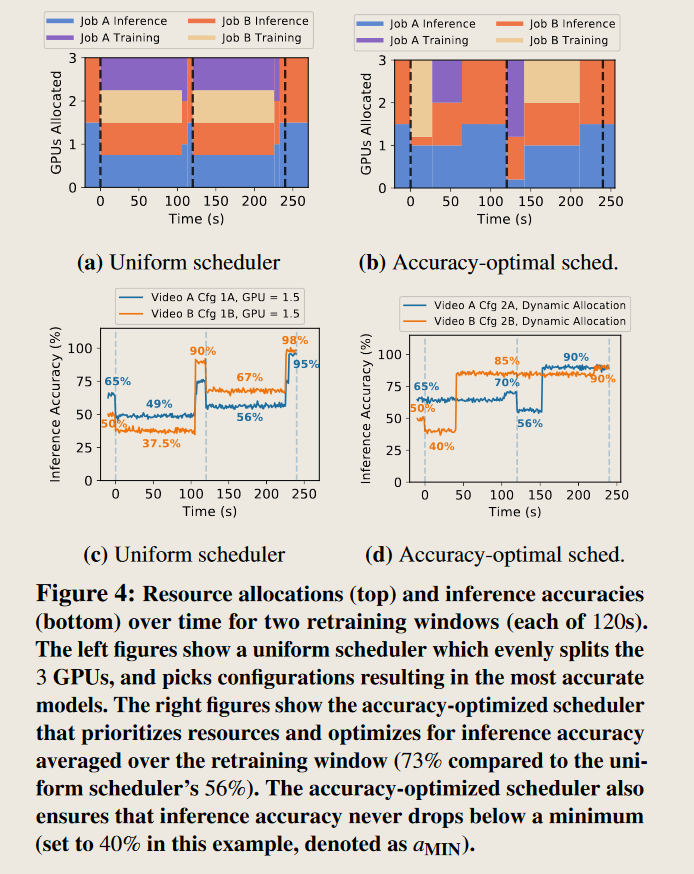

- resource scheduler:(1)GPU 分配按照预先定义的粗粒度分数进行分配; (2)不会在 retraining 阶段去改变分配方案,避免了更复杂的场景; (3)micro-profiler 可以进行剪枝

- micro-profiler: 测量分配 100% 的 GPU 的时候每个 epoch 的 retraining 持续时间,并根据不同的 epoch、allocation 和 training data size 进行缩放。

Continuous training on edge compute

2.1 Edge Computing for Video Analytics

- 在视频分析任务中采用边缘计算的原因:

- Uplink networks to the cloud are expensive

- Network links out of the edge locations experience outages

- Videos often contain sensitive and private data that users do not want sent to the cloud

2.2 Compressed DNN Models and Data drift

- Data drift

- 如果使用模型压缩会出现问题

- Continuous training

- 本文对 iCaRL 进行了改进,用于适配 changing characteristics

2.3 Accuracy benefits of continuous learning

- 切分 Cityscapes 数据集到固定的 retraining windows 中 => 其实本质上就是 task 划分 => 突出一个 non-iid 场景

- 本质上还是在做 CIL??

3 Scheduling retraining and inference jointly

3.1 Configuration diversity of retraining and inference

3.2 Illustrative scheduling example

4 Ekya: Solution Description

4.1 Formulation of joint inference and retraining

4.2 Thief Scheduler

- 问题太复杂了,解耦成 resource allocation (i.e., R and I )和 configuration selection (i.e., γ and λ)

6 Evaluation

- 比较方案:重用 cached history models

8 Related Work

- ML training systems

- Video processing systems

- 所有这些工作仅优化 DNN 推理的推理精度或系统/网络成本,这与 Ekya 专注于再训练不同

- LiveNAS[41]部署连续的再训练来更新视频升级模型,但重点关注有效地将客户端服务器带宽分配给单个视频流的不同子样本。相反,Ekya 专注于 GPU 分配,以最大限度地提高跨多个视频流的重新训练精度。